Scrape Hacker News newest posts with Mechanize

First post of 2016! I am very happy! Today we will scrape the newest posts from Hacker News. I think everyone knows what it is.

HN has really crazy markup, but that's not a problem for us.

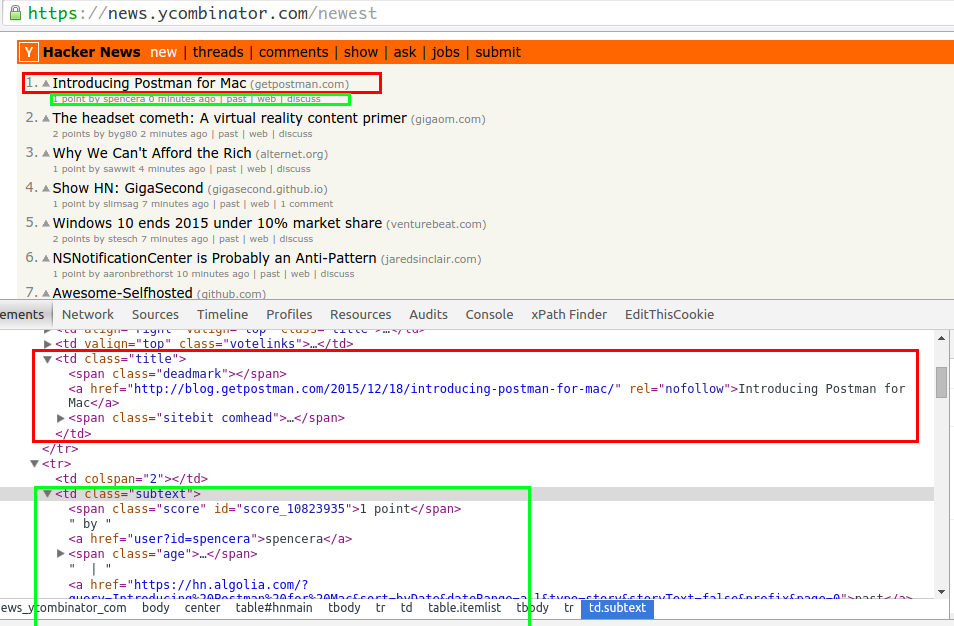

What we have:

A `<table>` with a lot of `<tr>` rows holding the data we need. The `<tr>` with class `athing` contains the post title (with the site domain); that's the red square. The next, separate `<tr>` contains the post details (author, points, etc.); that's the blue square.

Scrape HN posts

Today we will use the awesome mechanize gem:

```
gem install mechanize
```

Make the initial commit (5161dda). It isn't informative, just simple preparation.

Create a Mechanize instance. I set a user agent alias just for fun.

```ruby
require 'mechanize'

@agent = Mechanize.new do |agent|
  agent.user_agent_alias = 'Mac Safari'
end
```

Now we write a method for parsing a page and add a BASE_URL constant.

```ruby
BASE_URL = 'https://news.ycombinator.com'

def parse_links
  @data ||= [] # collected posts
  @page = @agent.get "#{BASE_URL}/newest"
  # Title rows (class "athing") plus the detail rows that mention points
  rows = @page.search("//tr[contains(@class,'athing') or "\
                      "contains(.,'point') and "\
                      "not(contains(.,'More'))]")
  # Rows come in (title row, detail row) pairs
  rows.each_slice(2) do |row_pair|
    current_data = scrape_header_row(row_pair.first)
    current_data.merge!(scrape_detail_row(row_pair.last))
    break if @data.include?(current_data) # already collected: stop this page
    @data << current_data
  end
  @data
end
```
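The `break if @data.include?(current_data)` line guards against collecting the same post twice. Duplicates are plausible on /newest, because new submissions push older posts down while we move between pages. Below is a minimal stand-alone sketch, using hypothetical post hashes instead of scraped rows, that tracks seen ids in a Set. Note that it differs from the code above in two ways: it skips a duplicate with `next` instead of stopping the page with `break`, and it compares ids instead of whole hashes, since fields such as points may change between page loads.

```ruby
require 'set'

# Hypothetical posts from two page loads: post 2 was pushed down onto page two
page_one = [{ id: 3, title: 'c' }, { id: 2, title: 'b' }]
page_two = [{ id: 2, title: 'b' }, { id: 1, title: 'a' }]

seen_ids = Set.new
collected = []

(page_one + page_two).each do |post|
  # Set#add? returns nil when the id is already in the set
  next unless seen_ids.add?(post[:id])

  collected << post
end

puts collected.map { |post| post[:id] }.inspect # prints [3, 2, 1]
```

A Set lookup is also constant time, while `Array#include?` scans the whole array on every row.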

Mechanize has an interesting approach to working with pages: after each action, such as clicking a link or submitting a form, we get a new page and must continue working with that new page.

To open (get) a page, Mechanize uses the #get method. Afterwards, the @page variable holds an instance of Mechanize::Page:

```ruby
@page = @agent.get "#{BASE_URL}/newest"
```

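Next we need to find the rows we want. For searching, Mechanize uses the #search method, which takes an XPath or CSS selector. In XPath, `and` binds tighter than `or`, so the expression below matches rows with the class athing, plus rows that contain 'point' but not 'More' (the not(...) check presumably keeps out the outer layout row, whose text includes the whole list and the More link).

```ruby
rows = @page.search("//tr[contains(@class,'athing') or "\
                    "contains(.,'point') and "\
                    "not(contains(.,'More'))]")
```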

The data we need is split across two rows; I marked the two kinds of rows with different colors. To process them in pairs we use #each_slice(2). Here is a simple example of how it works:

```
[1] pry(main)> [1,2,3,4,5,6,7,8,9,10].each_slice(2) do |item|
[1] pry(main)*   p item
[1] pry(main)* end
[1, 2]
[3, 4]
[5, 6]
[7, 8]
[9, 10]
```
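In parse_links the two rows of a pair are read with row_pair.first and row_pair.last. A small sketch with plain strings standing in for the rows (a hypothetical input, no Mechanize involved) shows the same pairing written with block destructuring, and what happens when the number of rows is odd:

```ruby
# Hypothetical stand-ins for the <tr> nodes, in document order
rows = ['title 1', 'detail 1', 'title 2', 'detail 2']

# Two block parameters destructure each two-element slice
pairs = rows.each_slice(2).map do |title_row, detail_row|
  { title_row: title_row, detail_row: detail_row }
end

# With an odd number of rows the last slice has a single element,
# so detail_row is nil for it
odd_pairs = ['title 1', 'detail 1', 'title 2'].each_slice(2).map do |title_row, detail_row|
  [title_row, detail_row]
end

puts pairs.length           # prints 2
puts odd_pairs.last.inspect # prints ["title 2", nil]
```

This is why the XPath has to return exactly one detail row for every title row: if one were missing, every row after the gap would be paired with the wrong partner.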

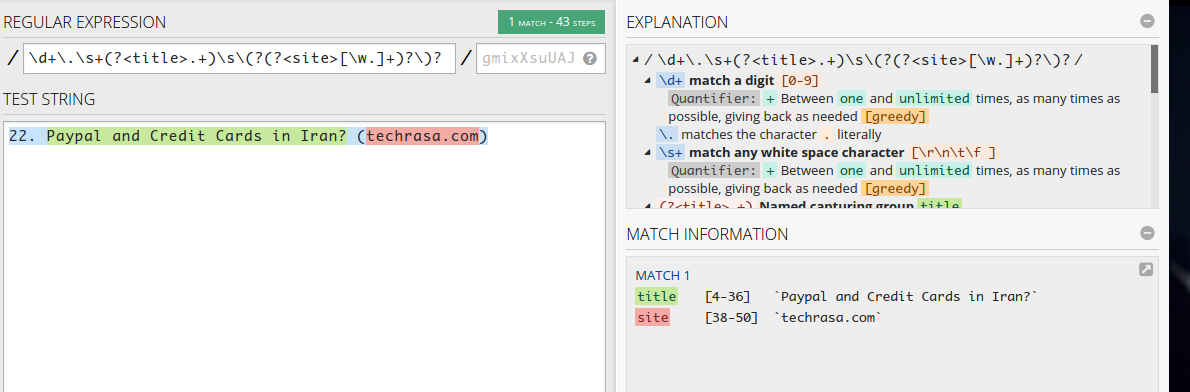

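We scrape the first row with a regex:

```ruby
def scrape_header_row(row)
  # Row text looks like "1. Title (example.com)"; the "(site)" part is optional
  header = row.text.strip.match(
    /\A\d+\.\s*(?<title>.+?)(?:\s+\((?<site>[^\s()]+\.[^\s()]+)\))?\z/
  )
  { title: header[:title], site: header[:site] }
end
```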

Of course, we could use row.at(...) to find the title, but we use that approach in the second method. Regex is more fun :)
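To see how a header regex of this kind treats different titles, here is a stand-alone sketch run against hypothetical row texts, assuming row.text, once stripped, looks like `1. Title (example.com)`. The pattern in the sketch requires the site to be a parenthesized, dotted name at the very end, so a post without a domain (an Ask HN post, for example) keeps its full title and gets a nil site, and a "(2016)" inside the title is not mistaken for a site:

```ruby
# Header regex sketch: rank, then the title, then an optional "(domain)" at the end.
# The site must contain a dot, so a parenthesized year stays part of the title.
HEADER_RE = /\A\d+\.\s*(?<title>.+?)(?:\s+\((?<site>[^\s()]+\.[^\s()]+)\))?\z/

def parse_header(text)
  match = text.strip.match(HEADER_RE)
  return nil unless match # not a header row

  { title: match[:title], site: match[:site] }
end

# Hypothetical row texts
samples = [
  '1. Show HN: My weekend project (github.com)',
  "2.\n  Ask HN: What are you working on?\n",
  '3. Some paper (2016) (example.org)'
]

samples.each { |text| p parse_header(text) }
```

Returning nil for text that does not look like a header avoids the NoMethodError that calling `[]` on a failed match would raise.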

For the second row we use the #scrape_detail_row method. It finds the discussion link, points, author name, and item link, and builds full_url (just for fun).

```ruby
def scrape_detail_row(row)
  # The discussion link reads "discuss", "1 comment" or "N comments"
  discuss_tag = row.at(".//a[contains(.,'discuss') or "\
                       "contains(.,'comments') or "\
                       "contains(.,'comment')]")
  discuss_link = discuss_tag[:href] # relative link to the item page

  {
    id: discuss_link[/\d+$/].to_i,
    point: row.at(".//span[@class='score']").text,
    user_name: row.at(".//a[contains(@href,'user?id=')]").text,
    link: discuss_link,
    full_url: "#{BASE_URL}/#{discuss_link}"
  }
end
```
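scrape_detail_row takes the post id from the trailing digits of the discuss link and builds full_url by string interpolation. A stand-alone sketch with a hypothetical href shows that step next to two Ruby standard-library alternatives: reading the id query parameter by name, and resolving the relative link with URI.join:

```ruby
require 'uri'

BASE_URL = 'https://news.ycombinator.com'
href = 'item?id=123456' # hypothetical relative href of a discuss link

# Approach used in the post: take the trailing digits
id_from_regex = href[/\d+$/].to_i

# Alternative: read the "id" query parameter by name
query = URI(href).query # => "id=123456"
id_from_query = URI.decode_www_form(query).to_h['id'].to_i

# URI.join resolves the relative href against the site root
full_url = URI.join("#{BASE_URL}/", href).to_s

puts id_from_regex # prints 123456
puts id_from_query # prints 123456
puts full_url      # prints https://news.ycombinator.com/item?id=123456
```

Reading the parameter by name keeps working even if the link ever gains extra query parameters after the id.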

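Now we have all the posts from one page, but we need to parse the other pages too. For that, we click the More link. Let's write a simple method for it:

```ruby
def view_more(pages = 1)
  parsed_pages = 0

  loop do
    yield # parse the current @page
    parsed_pages += 1

    next_link = @page.link_with(text: 'More')
    # Stop after `pages` pages, or when there is no More link
    break if parsed_pages >= pages || !next_link

    @page = next_link.click # clicking returns a new Mechanize::Page
  end
end
```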
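A paging loop like this is easy to get off by one, because the counter is checked only after a page has already been processed. A stand-alone sketch that swaps Mechanize pages for a hypothetical StubPage struct (a name plus the page its More link leads to) makes it easy to count how many pages a loop of this shape processes:

```ruby
# Hypothetical stand-in for Mechanize::Page: a name and the page "More" leads to
StubPage = Struct.new(:name, :more)

# Same shape as view_more: process the current page, then follow "More"
# until `pages` pages are done or there is no next page
def each_page(start_page, pages = 1)
  page = start_page
  processed = 0

  loop do
    yield page
    processed += 1
    break if processed >= pages || page.more.nil?

    page = page.more
  end
end

page_three = StubPage.new('page 3', nil)
page_two = StubPage.new('page 2', page_three)
page_one = StubPage.new('page 1', page_two)

two_pages = []
each_page(page_one, 2) { |page| two_pages << page.name }

all_pages = []
each_page(page_one, 5) { |page| all_pages << page.name } # only 3 pages exist

p two_pages # prints ["page 1", "page 2"]
p all_pages # prints ["page 1", "page 2", "page 3"]
```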

Then update the #parse_links method: add a pages parameter for the number of pages to parse, and a limit_id parameter that stops parsing at the first post whose id is below it.

```ruby
def parse_links(limit_id = nil, pages = 1)
  @data ||= []
  @page = @agent.get "#{BASE_URL}/newest"

  view_more(pages) do
    # ... the same row parsing as before; inside the each_slice loop add:
    return @data if limit_id && current_data[:id] < limit_id
  end

  @data
end
```
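The early return relies on /newest listing posts with decreasing ids, so everything after the first post below limit_id is older. Assuming that ordering holds, the same cut-off applied to a plain array of hypothetical post hashes is a take_while:

```ruby
# Hypothetical posts as they appear on /newest, newest first
posts = [{ id: 105 }, { id: 104 }, { id: 102 }, { id: 99 }, { id: 97 }]
limit_id = 100

# Keep posts until the first one older than limit_id, like the early return
recent = posts.take_while { |post| post[:id] >= limit_id }

p recent.map { |post| post[:id] } # prints [105, 104, 102]
```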

That's all. Using it is very simple:

```ruby
parser = Parsers::HackerNews::NewLinks.new
parser.parse_links(nil, 5) # parse 5 pages, with no limit_id
```

Summary

We made a simple parser for Hacker News. If you have any questions or suggestions, send a pull request to WScraping/hacker_news. If you liked this post, check out the next post about scraping jobs from Hacker News.

Stay in touch

Hi, I'm Maxim Dzhuliy. I will write about programming, web scraping, and tech news.